Scaling simulation

February 11, 2021 | 7 min. read

At Aurora, we build the right tools for the right problems. Here’s how we’re auto-generating the hundreds of thousands of virtual tests needed to rapidly develop and deploy the Aurora Driver.

Simulation is at the core of development at Aurora.

Simulation scenarios* allow us to understand how new autonomy features perform in thousands of scenes, beyond what we’re able to experience on the test track or the road. This massively accelerates development on the Aurora Driver. For example, we built hundreds of simulation scenarios that helped us develop software for our autonomous trucks before our physical trucks were fully assembled.

Even more important, simulation enables us to make fast progress safely. Simulation scenarios are specifically designed to help us find edge cases and catch errors in our software early, well before it’s loaded onto our vehicles. And we can be more confident that the Aurora Driver will perform new maneuvers safely on the road when it has effectively already practiced them millions of times in simulation. In fact, the Aurora Driver performed 2.27 million unprotected left turns in simulation before even attempting one in the real world.

But reaping these benefits requires a lot of scenarios–potentially as many as hundreds of thousands for a single operational area–made quickly and efficiently. Today, we’re introducing our approach to procedural scenario generation, the process of auto-generating the massive number of scenarios we need at scale.

*We refer to each unique simulation test as a scenario. A scenario consists of the initial conditions of the scene, like the position of the Aurora Driver, the positions of other vehicles, and the validation criteria that determine whether the Aurora Driver passes or fails the test.
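As a rough illustration of that definition, a scenario can be modeled as initial conditions plus a set of pass/fail checks. This is a minimal sketch, not Aurora's actual data model: the `Scenario` and `SimulationResult` types, their fields, and the 3.0 m/s² comfort limit are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

Position = Tuple[float, float]  # (x, y) in meters; a simplifying assumption


@dataclass
class SimulationResult:
    """Hypothetical summary of one simulated run."""
    max_abs_acceleration: float  # m/s^2
    collided: bool


@dataclass
class Scenario:
    """One simulation test: initial conditions plus validation criteria."""
    driver_position: Position
    actor_positions: List[Position]
    # Each validation maps a run summary to True (pass) or False (fail).
    validations: Dict[str, Callable[[SimulationResult], bool]] = field(
        default_factory=dict
    )

    def evaluate(self, result: SimulationResult) -> bool:
        """The scenario passes only if every validation passes."""
        return all(check(result) for check in self.validations.values())


scenario = Scenario(
    driver_position=(0.0, 0.0),
    actor_positions=[(30.0, 3.5)],
    validations={
        "no_collision": lambda r: not r.collided,
        # 3.0 m/s^2 is an illustrative comfort limit, not a real threshold.
        "comfortable_acceleration": lambda r: r.max_abs_acceleration <= 3.0,
    },
)

passed = scenario.evaluate(SimulationResult(max_abs_acceleration=2.1, collided=False))
failed = scenario.evaluate(SimulationResult(max_abs_acceleration=4.5, collided=False))
```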

When to include humans in the loop

Our scenario generation engineers are to autonomy developers what a Tour de France mechanic is to their partner cyclist. These teams work in a tight feedback loop to create curated scenarios that answer the highest priority questions about the performance of the Aurora Driver. Many aspects of these scenarios are manually adjusted and reviewed, including the map location and the speed and movement paths of surrounding vehicles.

The clear advantage of curated scenarios is that creators can leverage their judgment throughout the process to target specific testing conditions and build thoughtful validations. The result is scenarios that provide high-quality feedback to autonomy developers.

In the example below, the line of “ghost pedestrians” moving through the crosswalk illustrates how we might vary the velocity of a pedestrian in a scenario. In each variation of this scene, the Aurora Driver must both properly yield to the pedestrian in the crosswalk and safely execute (or correctly choose not to execute) an unprotected left turn. Nuanced validation is critical to getting a complete picture of the Aurora Driver’s performance, so our scenario creators manually set and adjust various pass/fail criteria such as acceleration comfort limits and which vehicles it should yield to throughout the maneuver.
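The pedestrian velocity variation described above amounts to a parameter sweep over one value while the validations stay fixed. The sketch below assumes evenly spaced speeds and uses hypothetical field names and limits; it is not the format our tooling actually uses.

```python
from typing import Dict, List


def speed_sweep(min_speed_mps: float, max_speed_mps: float, count: int) -> List[float]:
    """Return `count` evenly spaced speeds, including both endpoints."""
    if count < 2:
        raise ValueError("count must be at least 2")
    step = (max_speed_mps - min_speed_mps) / (count - 1)
    return [min_speed_mps + i * step for i in range(count)]


# One scenario variation per pedestrian speed; the pass/fail criteria are
# shared across variations. All values here are illustrative assumptions.
speeds = speed_sweep(0.5, 2.5, 5)
variations: List[Dict] = [
    {
        "pedestrian_speed_mps": speed,
        "max_acceleration_mps2": 3.0,
        "must_yield_to": ["pedestrian"],
    }
    for speed in speeds
]
```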

Procedural generation: Aurora’s approach to scaling simulation

The downside of curated scenarios becomes clear when you begin to scale toward coverage of an operational environment. While an individual scenario set has a fast turnaround time, the effort of building scenarios by hand scales linearly with the number of scenarios we need. This presents a challenge because we need hundreds of thousands of scenarios to make assertions about the Aurora Driver’s performance. Producing that many by hand would take so much time and effort that simulation would risk becoming a blocker.

Fortunately, while some of the scenarios required for each operational area require hand-tuning and nuanced validation, a large proportion don’t. We prefer to build the right tools to solve the right problems, so instead of asking team members to manually generate more scenarios, we’re working on building machines that can generate them automatically. More specifically, we’re building tools for procedural generation.

Procedural generation is used across industries for any application that can use algorithmic execution to generate data. A human user provides input up front to create a procedural recipe, and the tooling follows that recipe to generate a specified number of outputs based on the provided parameters. Put simply, the human effort required to generate one output is exactly the same as it is to generate one million. Additionally, updating or extending the recipe only needs to be done once, rather than having to update a large set of data products one by one. By applying procedural generation to simulation, we can create scenarios at the massive scale needed to rapidly develop and deploy the Aurora Driver.
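To make the recipe idea concrete, here is a minimal sketch in which a recipe is just a set of parameter ranges and a random seed. The `Recipe` type and `generate` function are hypothetical names for illustration; the point is only that the same recipe yields one output or many with no extra human input.

```python
import random
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class Recipe:
    """Hypothetical recipe: a (low, high) range per parameter, plus a seed."""
    ranges: Dict[str, Tuple[float, float]]
    seed: int = 0


def generate(recipe: Recipe, count: int) -> List[Dict[str, float]]:
    """Sample `count` outputs from the recipe, reproducibly for a given seed."""
    rng = random.Random(recipe.seed)
    return [
        {name: rng.uniform(low, high) for name, (low, high) in recipe.ranges.items()}
        for _ in range(count)
    ]


recipe = Recipe(ranges={"actor_speed_mps": (20.0, 30.0), "gap_m": (5.0, 50.0)})
one = generate(recipe, 1)
many = generate(recipe, 10_000)
```

Changing a range in the recipe and regenerating updates every output at once, which is the property the paragraph above describes.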

Lessons from the big screen

In movies, procedural generation is crucial for creating visual effects (VFX) like explosions and Spider-Man’s webs. Once the director settles on a particular “look,” VFX artists can often use the same procedural setup to quickly apply the effect to multiple scenes in the movie. Several members of Aurora’s simulation team previously held jobs as visual effects artists and technical directors, and we saw a clear opportunity to use the same procedural techniques from the film industry to scale simulation.

At Aurora, we build procedural workflows to quickly auto-generate large numbers of scenarios representing an operational area. Each workflow is a cascading series of filters and rules capable of producing one or several variations meeting the specified parameters. For example, after we filter our map data to one or more specific locations, rules may specify particular maneuver types, actor types (such as vehicles and pedestrians), and a range of actor densities (e.g., sparse to dense traffic).
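A cascade of this kind can be sketched as a filter step followed by a rule step that expands every surviving map segment into all combinations of the specified parameters. The segment records, field names, and functions below are hypothetical and far simpler than a real node network.

```python
from itertools import product
from typing import Dict, List

# Toy map data; real map data is much richer than this.
MAP_SEGMENTS: List[Dict[str, str]] = [
    {"id": "seg-1", "region": "route-a", "road_type": "highway"},
    {"id": "seg-2", "region": "route-a", "road_type": "highway"},
    {"id": "seg-3", "region": "route-b", "road_type": "urban"},
]


def filter_segments(segments: List[Dict[str, str]], **criteria: str) -> List[Dict[str, str]]:
    """Keep only the segments whose fields match every criterion."""
    return [
        segment
        for segment in segments
        if all(segment.get(key) == value for key, value in criteria.items())
    ]


def apply_rules(
    segments: List[Dict[str, str]],
    maneuvers: List[str],
    actor_types: List[str],
    densities: List[str],
) -> List[Dict[str, str]]:
    """Expand each segment into every combination of the rule parameters."""
    return [
        {"segment": seg["id"], "maneuver": m, "actor_type": a, "density": d}
        for seg, m, a, d in product(segments, maneuvers, actor_types, densities)
    ]


selected = filter_segments(MAP_SEGMENTS, region="route-a", road_type="highway")
scenarios = apply_rules(
    selected,
    maneuvers=["merge"],
    actor_types=["vehicle", "motorcyclist"],
    densities=["sparse", "medium", "dense"],
)
```

Here two segments survive the filter, and one maneuver, two actor types, and three densities give 2 × 1 × 2 × 3 = 12 scenario variations.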

The following is an illustration of what the process looks like behind the scenes:

We’ve built our system to be both flexible and extensible. First, our modular design allows us to easily update components to incorporate new features or autonomy capabilities. Second, our tooling is map agnostic, meaning that if we have extensive map updates or begin mapping new areas, we can quickly generate new scenarios using the same procedures. Finally, as the Aurora Driver is built to operate many different types of vehicle platforms, our system prioritizes easy generation of scenarios with any of our existing platform types (Class 8 trucks, sedans, etc.). Again, our modular design allows us to easily add new vehicle platforms as soon as they become available.

Having covered the basics, let’s walk through a full end-to-end example.

Case study: 100k highway scenarios in just a few hours

Our tools are capable of generating scenarios across a breadth of operational environments, but we’ll narrow our scope so we can dive deep into generation of a single capability: highway merges.

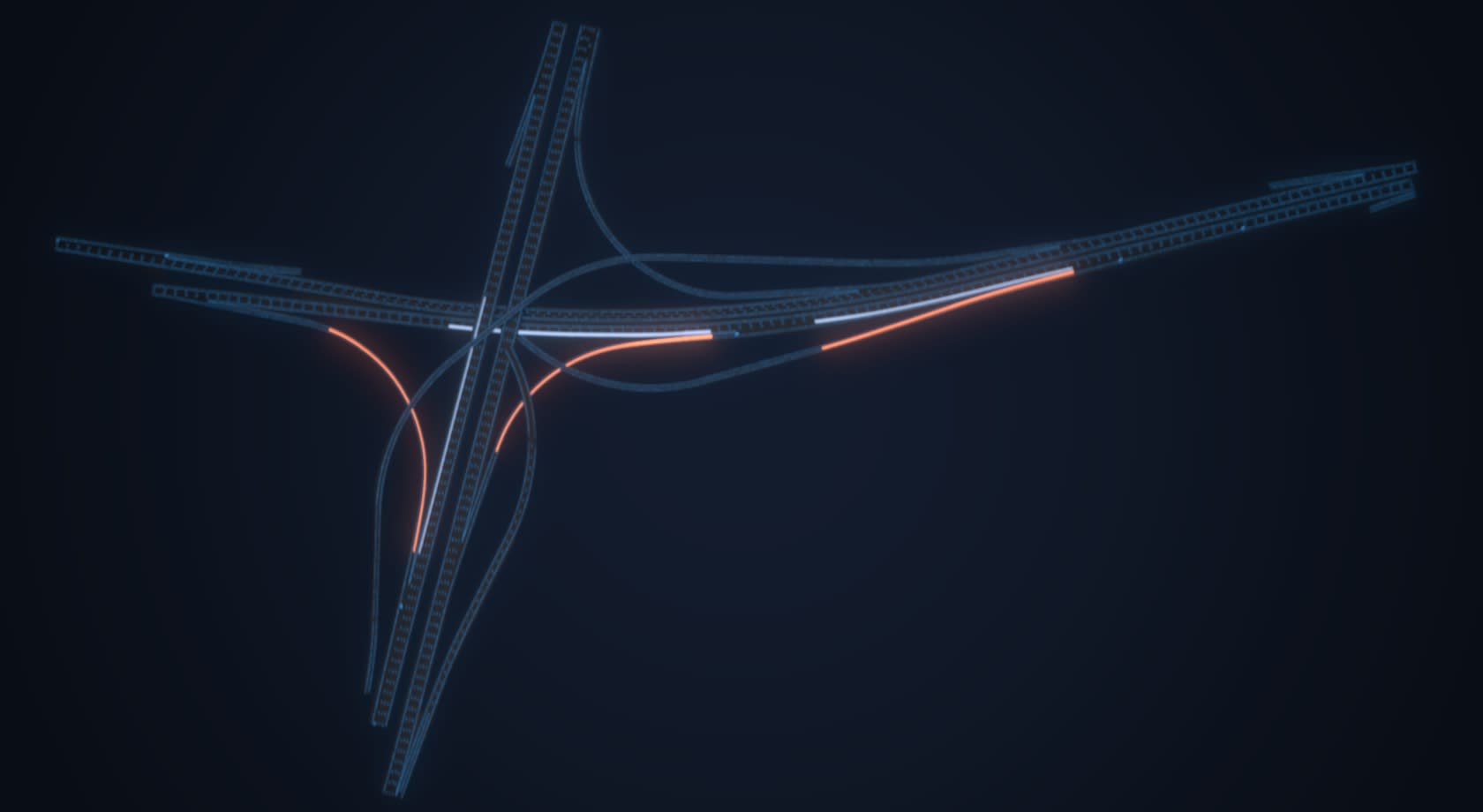

Below is an overview of the procedural workflow that we developed, zoomed out to give a sense of the actual tool. Did we mention these can be complicated?

Zoomed out node network for procedural generation of highway scenarios.

The following sections will provide an idea of the workflow and highlight some of the key parameters used to drive the results.

Importing and processing map data

The natural starting point is map data. The relevant operational environment, vehicle maneuvers, and even actor behaviors all depend on where the Aurora Driver is and where it intends to go. In our workflow, users specify the map data that matches their use case. For example, they may want to load all the map data for a specific city, or only one specific set of routes. Our example will walk through the generation of a single scenario along a single route, shown in the image below.

The route where we’ll generate the scenarios for our example.

Once the raw map data is loaded into our tooling, we transform it into a format that is easier to work with in the procedural network. For example, we construct filters to find regions of interest: map features worth extra attention. Map topology (e.g., four-lane highway), route data (e.g., areas where we know the Aurora Driver will have to perform a specific maneuver), and autonomy capabilities (e.g., unprotected turns) are all examples of features that might create a region of interest.

The regions of interest in our example are potential highway merge locations. To find optimal map areas, we consider features like the length of the merge lane, downstream map features (e.g., is the merge going to result in changing road type?), the intended speed limit of the merge lane, etc.
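One way to picture this step is as a filter over candidate merge locations using the features listed above. The sketch below is an assumption throughout: the `MergeCandidate` fields, the threshold values, and the choice to exclude merges with a downstream road type change are illustrative, not the criteria our tooling actually applies.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class MergeCandidate:
    """Hypothetical summary of one merge location in the map."""
    merge_id: str
    merge_lane_length_m: float
    merge_lane_speed_limit_mph: float
    road_type_changes_downstream: bool


def find_regions_of_interest(
    candidates: List[MergeCandidate],
    min_lane_length_m: float = 150.0,    # illustrative threshold
    min_speed_limit_mph: float = 50.0,   # illustrative threshold
) -> List[MergeCandidate]:
    """Keep merges that are long enough, fast enough, and stay on one road type."""
    return [
        c
        for c in candidates
        if c.merge_lane_length_m >= min_lane_length_m
        and c.merge_lane_speed_limit_mph > min_speed_limit_mph
        and not c.road_type_changes_downstream
    ]


candidates = [
    MergeCandidate("merge-1", 300.0, 65.0, False),
    MergeCandidate("merge-2", 80.0, 65.0, False),   # merge lane too short
    MergeCandidate("merge-3", 300.0, 65.0, True),   # road type changes
]
regions = find_regions_of_interest(candidates)
```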

A portion of our map with all regions of interest (merge locations) marked with orange circles.

Aurora Driver position and route

Now that we’ve identified appropriate locations in the map for scenarios, we place the Aurora Driver, piloting one of the specified vehicle platforms, in the scene. We generate all potential Aurora Driver positions and routes that satisfy a user-defined set of constraints, guaranteeing that we generate the desired types of scenarios. In our example, our target Aurora Driver start locations are high-speed merges where the speed limit is greater than 50 mph. To achieve this, the procedural workflow has the following rules:

- Start location has to be on a lane leading into a merge
- Start location has to be between 250 and 500 meters from a merge
- Start location has to be on a road where the speed limit is above 50 mph
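The three rules above translate directly into a predicate over candidate start locations. This is a minimal sketch with a hypothetical `CandidateStart` type; treating the 250 and 500 meter bounds as inclusive is an assumption.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class CandidateStart:
    """Hypothetical candidate start location for the Aurora Driver."""
    lane_id: str
    leads_into_merge: bool
    distance_to_merge_m: float
    speed_limit_mph: float


def is_valid_start(candidate: CandidateStart) -> bool:
    """Apply the three start-location rules listed above."""
    return (
        candidate.leads_into_merge
        # Inclusive bounds are an assumption; the rule says 250 to 500 meters.
        and 250.0 <= candidate.distance_to_merge_m <= 500.0
        and candidate.speed_limit_mph > 50.0
    )


candidates = [
    CandidateStart("lane-1", True, 300.0, 65.0),   # satisfies all rules
    CandidateStart("lane-2", True, 100.0, 65.0),   # too close to the merge
    CandidateStart("lane-3", True, 300.0, 45.0),   # speed limit too low
    CandidateStart("lane-4", False, 300.0, 65.0),  # does not lead into a merge
]
valid_starts: List[CandidateStart] = [c for c in candidates if is_valid_start(c)]
```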

Merging is an interesting maneuver because the right of way changes depending on the placement of the Aurora Driver. That means that for each merge point identified, we can plan to generate two different types of scenarios:

- One where the Aurora Driver merges into another lane
- One where the Aurora Driver reacts to other actors merging into its own lane
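In code, this means each merge point fans out into two scenario types, depending on which lane the Aurora Driver starts in. The dictionary keys and type labels below are hypothetical names used only for illustration.

```python
from typing import Dict, List


def scenario_types_for_merge(merge: Dict[str, str]) -> List[Dict[str, str]]:
    """Return the two scenario types available at a single merge point."""
    return [
        # The Aurora Driver starts in the merging lane and must merge.
        {"merge_id": merge["id"], "start_lane": merge["merging_lane"], "type": "driver_merges"},
        # The Aurora Driver starts in the through lane and reacts to mergers.
        {"merge_id": merge["id"], "start_lane": merge["through_lane"], "type": "driver_reacts"},
    ]


merge_points = [
    {"id": "merge-1", "merging_lane": "ramp-1", "through_lane": "hwy-1"},
    {"id": "merge-2", "merging_lane": "ramp-2", "through_lane": "hwy-2"},
]
planned = [s for merge in merge_points for s in scenario_types_for_merge(merge)]
```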

This image shows the areas of potential Aurora Driver start locations that fulfill the criteria above, all of which lead into a merge (marked with circles in the previous visual). Areas where the Aurora Driver would be expected to merge are marked in orange, and areas where the Aurora Driver would react to other vehicles merging into its lane are marked in blue.

At this point, we’ve successfully filtered our map to sections of highway where we could place the Aurora Driver at the outset of a merge scenario. We’ll generate several sets of variations by placing the Aurora Driver at different locations within each of these areas. Next, we will look at ways we can configure actors (such as bicyclists, vehicles, and pedestrians) along the Aurora Driver’s route.

Actor position, route, and properties

This is an incredibly complex topic because the auto-generation rules and parameters for a given scenario can vary depending on the type of scenario. For example, unprotected left turns in intersections are obviously different from high-speed lane changes. But generally, we write rules that vary:

- Actor Type: vehicle, motorcyclist, tractor-trailer, bus, etc.
- Actor Position: start position, trajectory, and velocity
- Driving Behavior: following space, willingness to give way, etc.
- Actor Density: how many actors are in the scene

The actual placement and trajectories of actors are designed to mimic real-life observed patterns and create yet another opportunity for variation. For example, we might model a range of merge responses from other vehicles from “friendly” (maintain a comfortable distance) to “aggressive” (cut-ins).
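The sketch below shows one simple way rules like these could be expressed: sample an actor count from a density range, then sample a type, position, speed, and behavior profile for each actor. It uses uniform random sampling as a stand-in; the paragraph above describes models based on observed real-life patterns, which this does not attempt. All names and numeric values are hypothetical.

```python
import random
from typing import Dict, List, Tuple

ACTOR_TYPES = ["vehicle", "motorcyclist", "tractor-trailer", "bus"]

# Illustrative behavior profiles spanning "friendly" to "aggressive".
BEHAVIORS: Dict[str, Dict[str, object]] = {
    "friendly": {"following_gap_s": 2.5, "gives_way": True},
    "aggressive": {"following_gap_s": 0.8, "gives_way": False},
}


def sample_actors(
    rng: random.Random,
    density_range: Tuple[int, int],
    route_length_m: float,
) -> List[Dict[str, object]]:
    """Sample one set of actors for a scenario variation."""
    count = rng.randint(*density_range)  # actor density: how many actors
    actors = []
    for _ in range(count):
        behavior = rng.choice(sorted(BEHAVIORS))
        actors.append(
            {
                "type": rng.choice(ACTOR_TYPES),
                "start_offset_m": rng.uniform(0.0, route_length_m),
                "speed_mps": rng.uniform(20.0, 30.0),
                "behavior": behavior,
                **BEHAVIORS[behavior],
            }
        )
    return actors


# A fixed seed makes each generated variation reproducible.
actors = sample_actors(random.Random(1), density_range=(3, 8), route_length_m=500.0)
```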

Results

Our procedural scenario generation engine is the valuable product of this work. The system architecture allows us to swap modular components to generate scenarios for specific autonomy capabilities and to change the models for actor density and properties.

Our work in this space is still in progress, but the results so far are exciting. For this small example, we generated over one hundred thousand scenarios in a handful of hours to cover a new operational environment. This is orders of magnitude faster than human creators, and generation times will continue to decrease as we scale our cloud resources. Below are just some of the variations we generated. Notice how each one varies the Aurora Driver platform type, traffic density, and the placement of other actors (bicyclists, passing vehicles, etc.).

Aurora is building a tool chest of testing infrastructure to drive development forward. After we’ve put into place the appropriate data to configure a new vehicle platform, a new operational area, or new partnership requirements, we can automatically generate test coverage and put valuable feedback in the hands of developers. This work is key to developing and deploying the Aurora Driver safely, quickly, and broadly.

Jessie Smith and Viktor Lundqvist
