The offline executor: Virtual testing and the Aurora Driver

August 20, 2019 | 5 min. read

Our approach to simulation reflects our design philosophy—we invest time to create tools expressly for self-driving cars, increasing operational safety down the road.

There’s a lot of talk in our industry about how many miles companies have driven on the road. While there is value in real-world miles, we believe the safest and most efficient way to deliver self-driving technology is by incorporating strong virtual testing capabilities into our development.

At Aurora, we invest time in building smart tools and processes that accelerate development while keeping safety at the forefront. Our simulation work is no exception. Unlike many in the industry, we’ve taken the time to develop our own solution for analyzing system performance through simulated autonomous driving scenarios, rather than relying on game engines or other pre-built software. We call this solution the offline executor.

The value of virtual testing

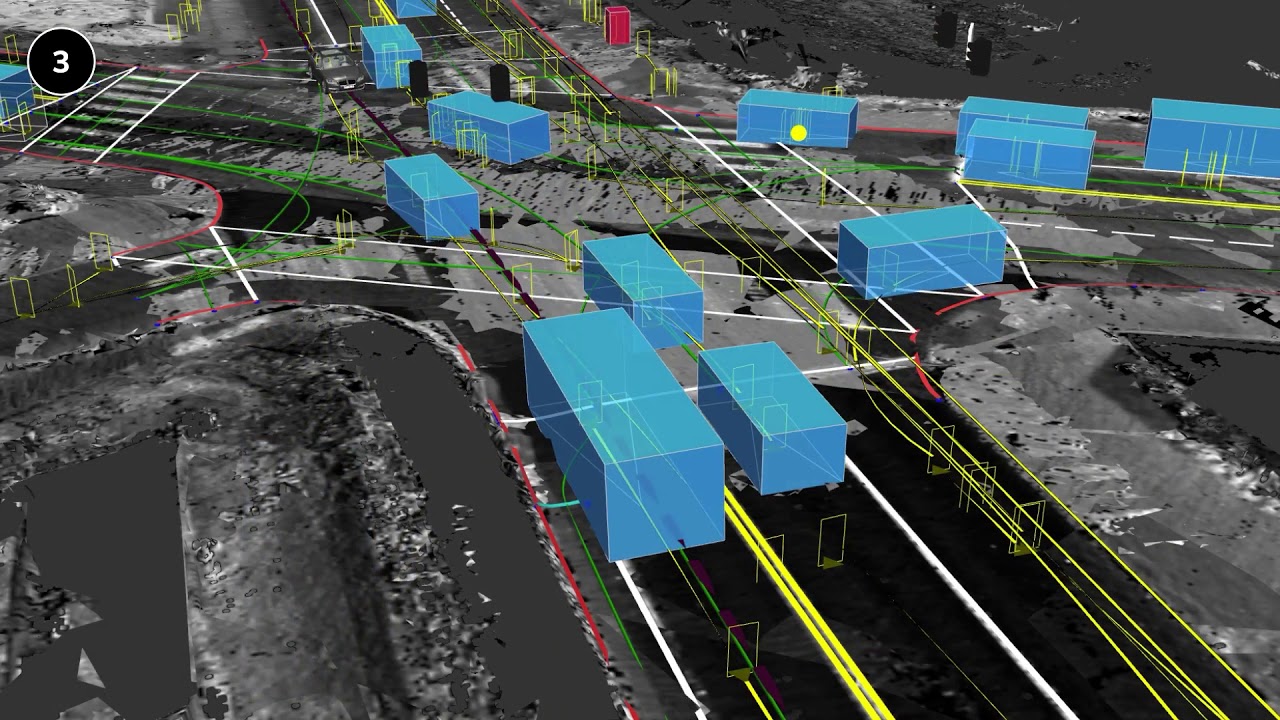

This is an example of a simulation with many permutations of a crowd of pedestrians crossing the street.

Virtual testing provides repeatable measures of performance, speeds development, and lowers the risk inherent to real-world driving activities. Simulation is one example of our virtual testing.

For example, if we’re working on the way our software handles pedestrian crosswalks, we can pull from our database of interactions for each occasion where our Driver encountered a pedestrian at a crosswalk. Then, in simulation, we can replay those interactions and evaluate how the new code would handle not only this situation, but myriad permutations of it. We can change the parameters of the encounter: Are there two adult pedestrians? An adult and a child? Is it a group of pedestrians? This allows us to test the Driver against a diverse set of cases without needing to drive this scenario repeatedly in the real world, hoping we encounter all of the interesting variations we care about.
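As a rough illustration of how one logged encounter can be expanded into many permutations, here is a minimal Python sketch. The `CrosswalkScenario` fields, the parameter values, and the `expand_variations` helper are all hypothetical and chosen only for this example; they are not Aurora's actual scenario schema or tooling.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class CrosswalkScenario:
    # Hypothetical parameters for illustration only.
    adults: int
    children: int
    walking_speed_mps: float


def expand_variations(adult_counts, child_counts, speeds):
    """Return every parameter combination that has at least one pedestrian."""
    return [
        CrosswalkScenario(adults, children, speed)
        for adults, children, speed in product(adult_counts, child_counts, speeds)
        if adults + children > 0  # an empty crosswalk is not an encounter
    ]


variations = expand_variations(
    adult_counts=[0, 1, 2],
    child_counts=[0, 1],
    speeds=[0.8, 1.4],
)
print(len(variations), "variations of one crosswalk encounter")
```

Each variation could then be replayed against the new code, which is far cheaper than waiting to meet the same combinations on the road.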

The vast majority of our simulation experiments are short and focus on specific interactions, allowing us to efficiently cover a huge number of effective testing miles.

The concept of determinism

Adapting game engines or other applications to create simulated environments for self-driving vehicle software to navigate is effective in the short term—it gets testing operations up quickly. But this approach has an important drawback, which involves the concept of determinism.

In simulation, a deterministic test is one that, given the same environmental inputs, provides the same result. No randomness is involved. Game engines can be remarkable pieces of software, and we’ve been able to make use of their technology in the design and preparation of individual elements for our simulations. But they’re not built specifically for self-driving car testing. They often don’t run in lock-step with the autonomy software, which leads to results that are not deterministic. Given the same sensor inputs, simulations based on game engines will not necessarily provide the same test result.

That’s tricky for simulation testing because it introduces a level of uncertainty. Simulation testing aims to verify the robustness of new software code. Did the test fail because of the new code? Or did it have something to do with the non-determinism of the simulation environment? When the simulation is based on a game engine, it’s difficult to be certain. With our systems, the simulation and autonomy software move in lock-step, which removes that uncertainty.
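To make the idea of lock-step execution concrete, the following toy Python sketch advances a simulated world and a stand-in planner one tick at a time, with all randomness drawn from a seeded generator. The `planner_step` and `run_lock_step` functions and every number in them are assumptions made for this example, not part of the offline executor.

```python
import random


def planner_step(gap_m):
    """Toy stand-in for autonomy software: brake when the obstacle is close."""
    return "brake" if gap_m < 10.0 else "cruise"


def run_lock_step(seed, ticks=50, dt_s=0.1):
    """Advance simulator and planner together, one tick at a time."""
    rng = random.Random(seed)  # the only source of randomness, and it is seeded
    gap_m, speed_mps = 30.0, 8.0
    log = []
    for tick in range(ticks):
        # 1. The simulator advances the world by exactly one tick.
        gap_m += rng.uniform(-0.2, 0.2)  # simulated jitter in obstacle position
        gap_m = max(0.0, gap_m - speed_mps * dt_s)
        # 2. The planner runs exactly once on that tick's state; the
        #    simulator does not move again until the planner has answered.
        command = planner_step(gap_m)
        if command == "brake":
            speed_mps = max(0.0, speed_mps - 3.0 * dt_s)
        log.append((tick, command, round(gap_m, 6)))
    return log


# Same inputs, same result: two runs produce identical logs.
assert run_lock_step(seed=7) == run_lock_step(seed=7)
```

Because neither side runs ahead of the other and no wall-clock timing is involved, a failing run can be attributed to the code under test rather than to the environment.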

Aurora’s offline executor

We’ve engineered a tool built specifically for the validation of self-driving car software, the offline executor, and it’s crucial to our simulation efforts. It uses the same programming framework as the Aurora Driver software stack, and the same libraries. In fact, because it is purpose-built to work with our system, the offline executor allows us to have deterministic offline testing for any module, for any team, across the entire organization. That’s a huge benefit. By contrast, integrating game engines with self-driving software can feel like fitting a square peg into a round hole.

With our offline executor, we don’t have to spend a huge amount of effort integrating the simulation environment with the rest of our system. Instead, we have a system designed from the ground up that enables lock-step execution of self-driving software and the simulation it interacts with. We can then test the same software that runs on our vehicles at massive cloud scale, including detailed simulation of the latencies and compute delays that occur on real-world hardware.
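One common way to model latencies and compute delays without depending on wall-clock time is a virtual clock that delivers events in simulated-time order. The sketch below illustrates that general technique only; the `VirtualClock` class, the event names, and the delay values are hypothetical and do not describe how the offline executor is implemented.

```python
import heapq
from dataclasses import dataclass, field


@dataclass(order=True)
class Event:
    time_ms: int
    seq: int  # tie-breaker so equal timestamps keep scheduling order
    name: str = field(compare=False)


class VirtualClock:
    """Delivers events in simulated-time order, independent of wall time."""

    def __init__(self):
        self.now_ms = 0
        self._queue = []
        self._seq = 0

    def schedule(self, delay_ms, name):
        heapq.heappush(self._queue, Event(self.now_ms + delay_ms, self._seq, name))
        self._seq += 1

    def run(self):
        delivered = []
        while self._queue:
            event = heapq.heappop(self._queue)
            self.now_ms = event.time_ms  # jump straight to the event's time
            delivered.append((event.time_ms, event.name))
        return delivered


clock = VirtualClock()
# Hypothetical delays: a frame arrives at 100 ms, perception takes 35 ms,
# and planning takes a further 20 ms. Scheduling order does not matter.
clock.schedule(delay_ms=100 + 35 + 20, name="planner_output")
clock.schedule(delay_ms=100, name="camera_frame")
clock.schedule(delay_ms=100 + 35, name="perception_output")
timeline = clock.run()
print(timeline)
```

Since the delays are part of the simulated timeline, the same run produces the same ordering on a laptop or on a heavily loaded cloud machine.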

When we build a new capability, the first step is to build the simulations that feature the interaction we’re developing. Next, we write the code, and then we run the code through the simulations using the offline executor.

Using the offline executor streamlines our development process by employing software modules in a manner similar to the overall self-driving stack. For example, when testing an element of the motion-planning module, the offline executor’s simulation module feeds the planner the set of inputs it otherwise would get from the perception module. These are synthesized inputs, but the motion-planning module can’t tell the difference. That means the system reacts in the same way to a simulated environment as it would in the real world — which is crucial to making reliable autonomy software.

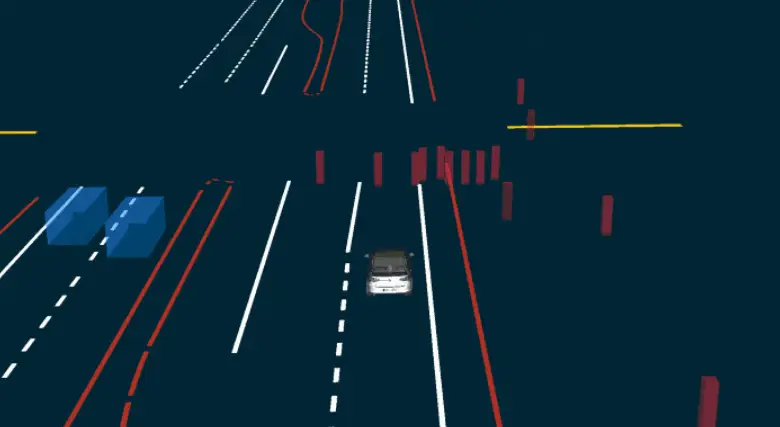

Here’s an example: The simulation module feeds the motion-planning module the knowledge that a car is passing in the right lane, and a pedestrian is traversing a crosswalk up ahead.

Based on these inputs, the motion-planning module will calculate a trajectory: the vehicle’s intended course over the next few seconds. That output goes to the validation module. The validator’s job is to decide whether the trajectory is a good one. Did the motion-planning module do the right thing? The validation module evaluates the trajectory by asking a series of questions. Did the trajectory obey the law? Did the motion-planning module meet its objective? Is this trajectory comfortable for vehicle occupants? If the trajectory passes all such tests, the motion-planning module passes the interaction.
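The series of questions a validator asks can be pictured as a set of independent checks that must all pass. The Python sketch below is an assumption-laden illustration: the `TrajectoryPoint` fields, the three check functions, and the thresholds are invented for this example and are not Aurora's validation criteria.

```python
from dataclasses import dataclass


@dataclass
class TrajectoryPoint:
    t_s: float         # time from now, in seconds
    speed_mps: float   # planned speed
    distance_m: float  # distance travelled along the path


# Hypothetical thresholds for illustration only.
SPEED_LIMIT_MPS = 11.0
MAX_DECEL_MPS2 = 3.0
STOP_LINE_M = 20.0


def obeys_speed_limit(traj):
    return all(p.speed_mps <= SPEED_LIMIT_MPS for p in traj)


def stops_before_line(traj):
    final = traj[-1]
    return final.speed_mps == 0.0 and final.distance_m <= STOP_LINE_M


def is_comfortable(traj):
    for prev, cur in zip(traj, traj[1:]):
        decel = (prev.speed_mps - cur.speed_mps) / (cur.t_s - prev.t_s)
        if decel > MAX_DECEL_MPS2:
            return False
    return True


CHECKS = {
    "legal": obeys_speed_limit,
    "objective": stops_before_line,
    "comfort": is_comfortable,
}


def validate(traj):
    """Run every check; the trajectory passes only if all of them do."""
    results = {name: check(traj) for name, check in CHECKS.items()}
    return all(results.values()), results


gentle = [TrajectoryPoint(t, v, d) for t, v, d in
          [(0, 8.0, 0.0), (1, 6.0, 7.0), (2, 4.0, 12.0), (3, 2.0, 15.0), (4, 0.0, 16.0)]]
harsh = [TrajectoryPoint(t, v, d) for t, v, d in
         [(0, 8.0, 0.0), (1, 8.0, 8.0), (2, 4.0, 14.0), (3, 0.0, 16.0)]]

print(validate(gentle))
print(validate(harsh))
```

Keeping each question as a separate named check means a failure report can say which expectation was violated, not just that the interaction failed.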

Test-driven development

Using test-driven development means we build a set of simulations to test a capability before we even write the code to implement that capability. For example, take an unprotected left turn. We create simulations and, at first, all of them usually fail; these are progression simulations. As we start to add code to implement the capability, we continue to test it against the simulations, and more and more tests start to pass. We set a bar, and once our software passes that bar, we’re ready to hit the road.
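The idea of progression simulations and a pass bar can be sketched in a few lines of Python. The simulation names, the results, and the default bar of 0.95 are placeholders made up for this example; the source does not state what bar Aurora uses.

```python
def pass_rate(results):
    """Fraction of progression simulations that currently pass."""
    return sum(results.values()) / len(results)


def ready_for_road(results, bar=0.95):
    # The bar value is a hypothetical placeholder.
    return pass_rate(results) >= bar


# Hypothetical results for an unprotected-left-turn capability.
before_any_code = {"gap_small": False, "gap_large": False,
                   "oncoming_truck": False, "pedestrian_in_crosswalk": False}
partway = {"gap_small": False, "gap_large": True,
           "oncoming_truck": True, "pedestrian_in_crosswalk": True}
finished = {name: True for name in before_any_code}

for label, results in [("start", before_any_code), ("partway", partway), ("finished", finished)]:
    print(label, pass_rate(results), ready_for_road(results))
```

Tracking the pass rate over time gives engineers a quick signal of progress while the bar stays fixed.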

This approach has multiple advantages. For one, there is a clear safety advantage to testing and validating our code before it’s on the road. It also empowers our engineers. They’re able to get feedback more quickly as they write the code, which enables them to move faster, without sacrificing safety. It also allows them to think outside the box; simulation acts as a safety net—allowing our engineers to be creative in their code because they’re confident it will be tested many times in simulation before it’s out in the world.

To enable this approach for perception modules, we need to simulate sensor data. To do this, we use the offline executor to create a simulation module that feeds the perception module the data it otherwise would get from its sensors: the point clouds from the LIDAR, the pixels from the camera, the returns from radar. Using synthesized data, perception draws its conclusions about the world around it. The output of this process is a set of predictions about what the car sees: this set of returns is a parked car, this set of data is a cyclist, and so on. In the real world, these outputs would be fed to the motion-planning module. In simulation tests, we capture these outputs and feed them into the perception validation pipeline, which assesses the correctness of the perception module.

This gives us the option to test the perception system separately from the motion-planning system, which means we can validate the parts individually before bringing them together. The offline executor also allows simulation testing in which the perception and motion-planning modules run together. All of this adds up to a flexible and safe way of validating the system with the offline executor.
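Because a simulation knows exactly what it placed in the scene, perception output can be compared against that ground truth. The following Python sketch shows one simple way such a comparison might look; the `score_perception` function, the object IDs, and the assumption that predictions and ground truth share IDs are all hypothetical and do not describe Aurora's perception validation pipeline.

```python
def score_perception(predicted, ground_truth):
    """Compare predicted labels with the labels the simulation actually placed.

    Both arguments map an object ID to a label. Shared IDs are an
    assumption made to keep this example short.
    """
    correct = sum(
        1 for obj_id, label in ground_truth.items()
        if predicted.get(obj_id) == label
    )
    missed = [obj_id for obj_id in ground_truth if obj_id not in predicted]
    spurious = [obj_id for obj_id in predicted if obj_id not in ground_truth]
    return {
        "accuracy": correct / len(ground_truth),
        "missed": missed,
        "spurious": spurious,
    }


# What the simulation module synthesized.
ground_truth = {1: "parked_car", 2: "cyclist", 3: "pedestrian", 4: "parked_car"}
# What the perception module reported.
predicted = {1: "parked_car", 2: "cyclist", 3: "cyclist", 5: "pedestrian"}

report = score_perception(predicted, ground_truth)
print(report)
```

Here object 3 is mislabeled, object 4 is missed, and object 5 does not exist in the scene, so the report separates three different kinds of perception error.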

Delivering a safe and robust Aurora Driver

Before we conclude, it’s important to ask: as we develop the Aurora Driver, where does on-road testing fit in?

Rather than a forum for new development, we treat real-world testing as a mechanism for validating and improving the fidelity of our more rapid virtual testing. Road time is also useful as a way to collect data concerning how expert human drivers navigate complex scenarios.

This strategy has allowed us to contain the size of our on-road testing fleet. With safety in mind, we limit the distance our test vehicles travel by pursuing mileage quality over quantity; that is, we seek out interesting miles rather than just pursuing large quantities of miles.

So whether it’s simulation, on-road testing, or other development, we take pride in an uncompromising and rigorous development process designed expressly for delivering a safe and robust Aurora Driver. While it can be tempting to use pre-existing solutions because they can save time in the short run, we know investing the effort now will pay huge dividends in the future.

Aurora is delivering the benefits of self-driving technology safely, quickly, and broadly. We’re looking for talented people to join our team.
