To safely power autonomous semitrucks at high speeds on highways, the Aurora Driver must accurately and reliably perceive objects and vehicles at great distances. To do so, the Aurora Driver is equipped with sensor pods made up of ultra-high-definition cameras, imaging radar, and our proprietary FirstLight lidar. Data from each of these sensors must be processed and fused into a holistic and dynamic picture of the truck’s environment.

However, long-range perception models can be sensitive to miscalibration between sensors. At distances upward of several hundred meters—only a few seconds away at highway speeds—a small miscalibration of a few milliradians can result in the offset of a full highway lane. This can lead to false negatives or mislocalized detections.
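The arithmetic behind this claim is easy to check. The sketch below is illustrative only: the 400 m range and the 3.7 m lane width (a typical US highway lane) are assumptions chosen for the example, not figures published by Aurora, and `lateral_offset_m` is a hypothetical helper name.

```python
import math

LANE_WIDTH_M = 3.7  # assumption: typical US highway lane width (12 ft)


def lateral_offset_m(range_m: float, miscalibration_mrad: float) -> float:
    """Sideways displacement of a point at range_m caused by an angular error."""
    return range_m * math.tan(miscalibration_mrad * 1e-3)


# Assumed range of 400 m; sweep a few angular errors.
for mrad in (1.0, 5.0, 9.0):
    offset = lateral_offset_m(400.0, mrad)
    share = offset / LANE_WIDTH_M
    print(f"{mrad:.0f} mrad at 400 m -> {offset:.2f} m ({share:.0%} of a lane)")
```

Because the offset grows linearly with range for small angles, an error that is negligible at 50 m can become roughly a lane width at several hundred meters.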

To ensure that our sensors are accurately calibrated when a vehicle leaves on a mission, we use well-established calibration techniques at our terminals. Fiducials (checkerboards) are placed around the vehicle, and the Aurora Driver’s cameras and lidar sensors capture them from various angles. Our calibration system then aligns and optimizes that sensor data to find the relative pose of each fiducial. We repeat this for every camera-lidar pair on the vehicle to obtain a consistent calibration for the entire sensor suite, enabling seamless image-lidar fusion.

Despite this rigorous calibration process and the high level of ruggedization of our sensor pods, miscalibration caused by mechanical fatigue, thermal drift, or even impacts with flying debris is always possible during the truck’s long journey.

Enter online calibration

To address this issue, we’ve developed an online calibration system. Just as the human brain constantly processes and aligns binocular visual information to focus on a single point and create a seamless representation of the world, the Aurora Driver is able to detect and measure miscalibrations and recalibrate while on the road, in the wild, without fiducials.

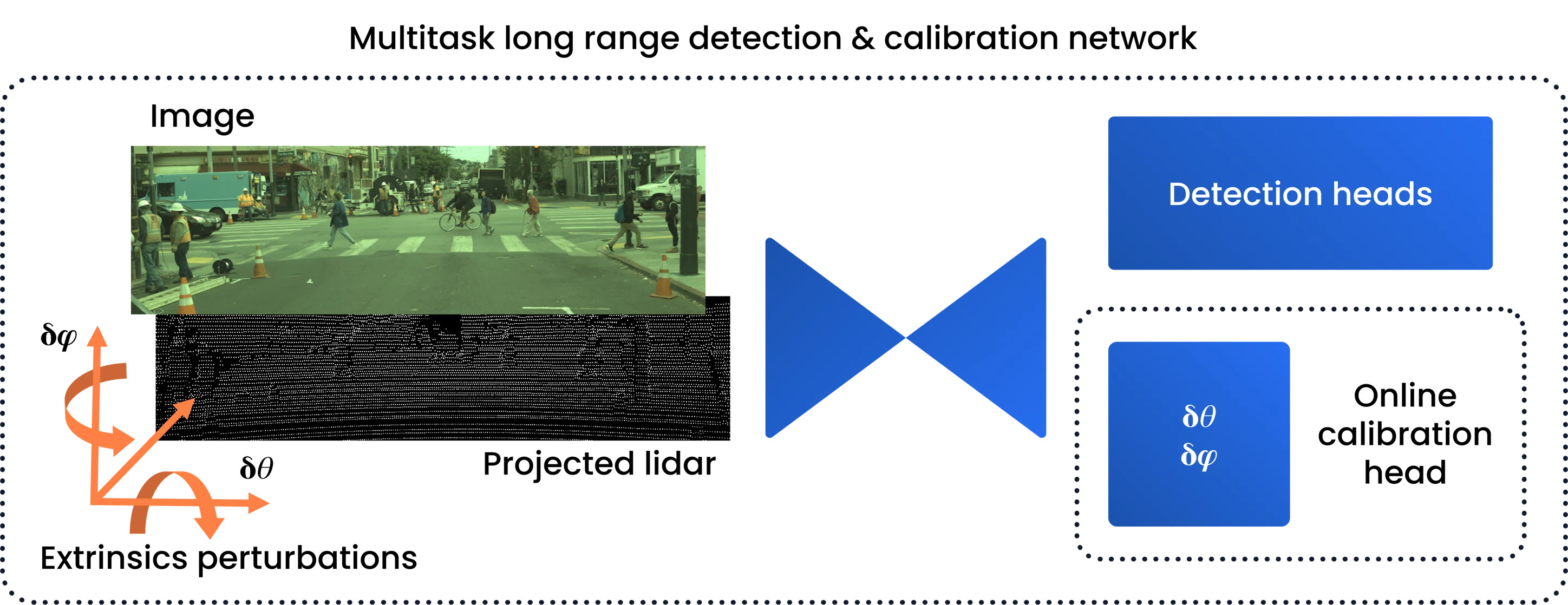

Previous work has shown that online calibration is possible, but so far such systems have been implemented as dedicated, computationally heavy models that require additional onboard GPU resources. We decided instead to train our online calibration system as an auxiliary output of our existing long-range detection model. This lightweight regression head is substantially less computationally intensive than a dedicated model because it simply reuses the lidar and camera data that our long-range detection model is already processing.

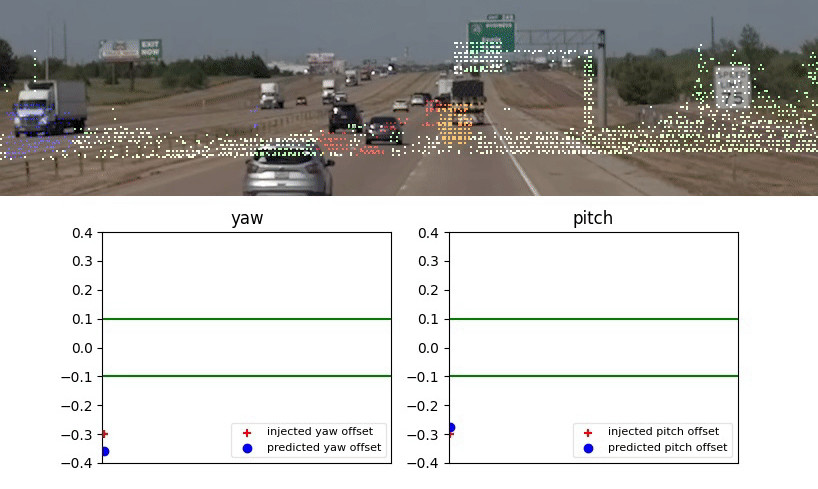

By injecting known amounts of noise into well-calibrated camera and lidar extrinsics and training our model to regress that noise as an auxiliary task, we were able to produce a long-range detector that is both capable of performing online calibration and much more robust to miscalibrated input data. The GIF below shows the system in action: we artificially miscalibrated our long-range lidar by significant amounts in both pitch and yaw (red curve). The model accurately regresses that miscalibration in real time (<100 ms), with an average absolute error of under 5% of the injected noise values, keeping calibration well within our tolerated range.
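The noise-injection step can be sketched as follows. This is a minimal illustration, not Aurora's implementation: the axis convention (x forward, y left, z up, so pitch is a rotation about y and yaw about z), the uniform noise distribution, and the names `inject_noise`, `rot_y`, and `rot_z` are all assumptions made for the example.

```python
import math
import random

Matrix = list[list[float]]

IDENTITY: Matrix = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]


def rot_y(angle: float) -> Matrix:
    """Rotation about the y axis (pitch under the assumed convention)."""
    c, s = math.cos(angle), math.sin(angle)
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]


def rot_z(angle: float) -> Matrix:
    """Rotation about the z axis (yaw under the assumed convention)."""
    c, s = math.cos(angle), math.sin(angle)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Product of two 3x3 matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def inject_noise(rotation: Matrix, max_noise_rad: float,
                 rng: random.Random) -> tuple[Matrix, tuple[float, float]]:
    """Perturb a well-calibrated extrinsic rotation by a known pitch/yaw offset.

    Returns the noisy rotation (fed to the model) and the (pitch, yaw) offset
    (the regression target for the auxiliary calibration head).
    """
    pitch = rng.uniform(-max_noise_rad, max_noise_rad)
    yaw = rng.uniform(-max_noise_rad, max_noise_rad)
    noise = matmul(rot_z(yaw), rot_y(pitch))
    return matmul(noise, rotation), (pitch, yaw)


rng = random.Random(0)
noisy_rotation, target = inject_noise(IDENTITY, math.radians(1.0), rng)
print("regression target (pitch, yaw) in rad:", target)
```

Because the injected offset is known exactly, every training sample comes with a free ground-truth label for the calibration head, with no extra annotation needed.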

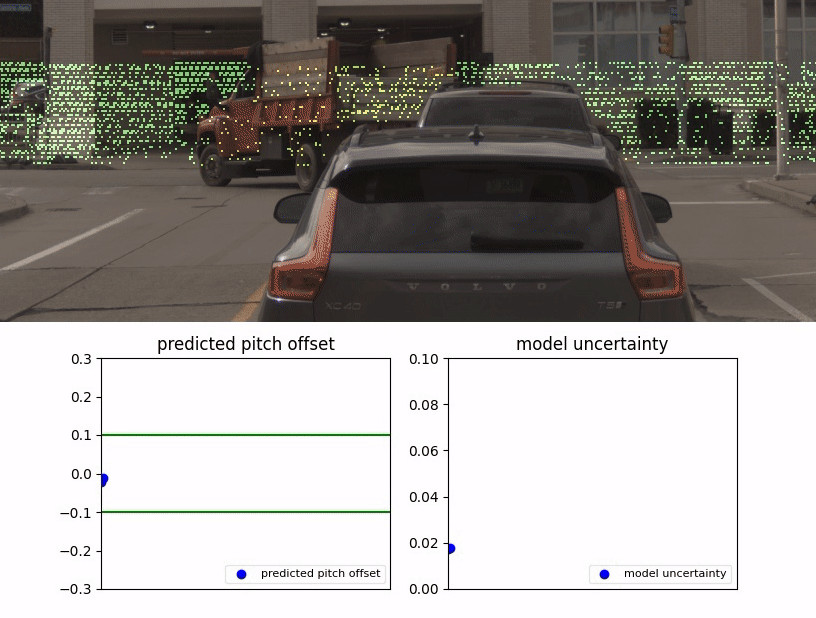

In some situations, sensor data may not contain enough information for the model to confidently estimate the lidar/image calibration. To prevent the system from flagging such cases as miscalibration, we trained the model to estimate this observation noise, also known as heteroscedastic aleatoric uncertainty. In the example below, the autonomous vehicle makes a sharp 90-degree turn in close proximity to a building, which results in a featureless image with few lidar returns. The model’s estimated uncertainty spikes when this happens, letting us know that the estimated calibration can be ignored.
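One common way to train a regression head to report its own observation noise is a Gaussian negative log-likelihood, where the network predicts a log-variance alongside each estimate. The post does not say which loss Aurora uses, so the sketch below is an assumption; `gaussian_nll`, `is_confident`, and the thresholds are hypothetical.

```python
import math


def gaussian_nll(target: float, mean: float, log_var: float) -> float:
    """Per-sample Gaussian negative log-likelihood (constant term dropped).

    A large predicted variance discounts the squared error but pays a
    log-variance penalty, so the model cannot claim uncertainty for free.
    """
    return 0.5 * (math.exp(-log_var) * (target - mean) ** 2 + log_var)


def is_confident(log_var: float, max_std_rad: float) -> bool:
    """Gate an estimate: trust it only if the predicted std is small enough."""
    return math.exp(0.5 * log_var) <= max_std_rad


# Same large error, scored under low and high predicted variance.
print(gaussian_nll(target=3.0, mean=0.0, log_var=0.0))
print(gaussian_nll(target=3.0, mean=0.0, log_var=2.0))
```

Under this formulation, frames like the sharp turn next to a building would yield a high predicted variance, and a gate such as `is_confident` would drop the estimate rather than report a miscalibration.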

In addition to accurately predicting sensor miscalibration and maintaining performance on its original detection task, the multitask model also significantly improves detection of small objects at long distances, as shown in the table below. This allows the Aurora Driver to see construction zones or pedestrians on the road earlier, giving the motion planner more time to make a safe decision.

We also use this system to generate post-mission reports that summarize the calibration status of collected data. These reports feed into our data validation system to help us filter out miscalibrated data before it is ingested by our labeling engine.
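A post-mission summary of this kind could look roughly like the sketch below. The report fields, thresholds, and the `CalibrationEstimate` and `summarize_mission` names are hypothetical and chosen only to illustrate the idea of discarding uncertain frames before judging a mission's calibration.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class CalibrationEstimate:
    yaw_mrad: float  # estimated yaw miscalibration for one frame
    std_mrad: float  # model-predicted uncertainty for that estimate


def summarize_mission(estimates: list[CalibrationEstimate],
                      max_std_mrad: float = 0.5,
                      tolerance_mrad: float = 1.0) -> dict:
    """Summarize calibration status using only confident per-frame estimates."""
    confident = [e for e in estimates if e.std_mrad <= max_std_mrad]
    if not confident:
        return {"status": "unknown", "confident_fraction": 0.0,
                "median_yaw_mrad": None}
    median_yaw = median(e.yaw_mrad for e in confident)
    status = "ok" if abs(median_yaw) <= tolerance_mrad else "miscalibrated"
    return {"status": status,
            "confident_fraction": len(confident) / len(estimates),
            "median_yaw_mrad": median_yaw}


frames = [
    CalibrationEstimate(2.1, 0.2),
    CalibrationEstimate(1.9, 0.3),
    CalibrationEstimate(9.0, 5.0),  # uncertain frame, excluded from the median
    CalibrationEstimate(2.0, 0.1),
]
print(summarize_mission(frames))
```

Using the median of confident frames keeps a single outlier from flagging an entire mission, while a status of "miscalibrated" could be used to hold the data back from labeling.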

Putting it all together

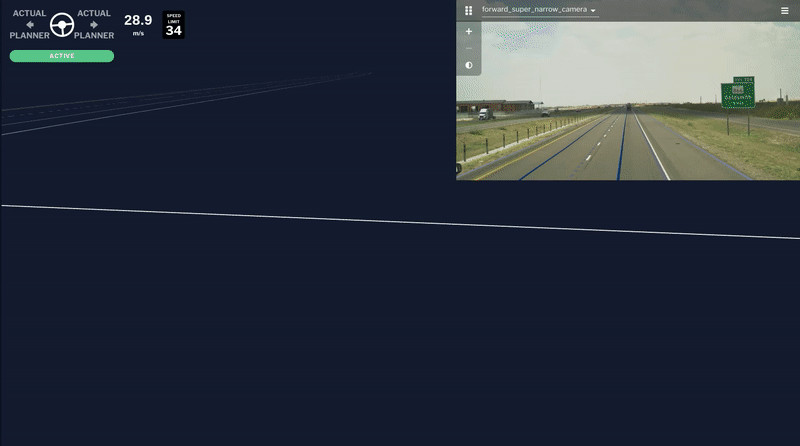

Using this approach, the Aurora Driver can accurately detect and track small objects like pedestrians and motorcyclists beyond 400 meters in 3D. Here is our system in action: FirstLight lidar begins to receive a couple of returns from the side of the highway, more than 400 meters away. Thanks to the extremely tight calibration between image and lidar data, our long-range detector can correctly associate a single lidar point with the pedestrian in the ultra-high-definition image from our cameras. The FirstLight lidar returns also carry information about the velocity of the detected pedestrian. With several hundred meters of warning, the truck has enough time to make a safe decision, which in this case could be a lane change to create extra space while passing the pedestrian.
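To see why associating a single lidar point with a distant pedestrian demands tight calibration, consider a simple pinhole projection. The focal length (4000 px), image center, pedestrian width, and function names below are assumptions for illustration, not Aurora's camera parameters.

```python
import math

Point = tuple[float, float, float]


def project(point_cam: Point, fx: float, fy: float,
            cx: float, cy: float) -> tuple[float, float]:
    """Pinhole projection; camera frame is x right, y down, z forward."""
    x, y, z = point_cam
    if z <= 0:
        raise ValueError("point is behind the camera")
    return fx * x / z + cx, fy * y / z + cy


def apply_yaw_error(point_cam: Point, yaw_rad: float) -> Point:
    """Rotate a point about the camera's vertical (y) axis."""
    x, y, z = point_cam
    c, s = math.cos(yaw_rad), math.sin(yaw_rad)
    return c * x + s * z, y, -s * x + c * z


FX = FY = 4000.0         # assumption: focal length in pixels
CX, CY = 1920.0, 1080.0  # assumption: principal point

lidar_point = (0.0, 0.0, 400.0)  # one return, 400 m straight ahead
u_true, _ = project(lidar_point, FX, FY, CX, CY)
u_off, _ = project(apply_yaw_error(lidar_point, 0.002), FX, FY, CX, CY)

pedestrian_px = FX * 0.5 / 400.0  # image width of an assumed 0.5 m wide person
print(f"pixel shift from 2 mrad yaw error: {u_off - u_true:.1f} px")
print(f"pedestrian width at 400 m: {pedestrian_px:.1f} px")
```

Under these assumed numbers, a yaw error of only 2 mrad shifts the projected lidar point by more than the pedestrian's width in the image, so the point would land on the background instead.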

Our automatic, real-time miscalibration detection and recalibration capability helps ensure that the Aurora Driver’s perception system is consistently high-performing—a critical facet of operating safely on public highways at scale.
