Seeing with superhuman clarity

The physics and architecture behind the Aurora Driver’s perception system

When you’re operating an 80,000-pound truck at 65 mph, every extra meter of visibility matters. Longer detection range gives the Aurora Driver more time to react and more space to maneuver safely. Highway-speed autonomy demands perception that can detect and track low-reflectivity hazards hundreds of meters ahead, with performance that stays consistent even when lighting, road geometry, or weather make visibility difficult.

The Aurora Driver is built to meet those demands with exceptional long-range awareness. Its multi-sensor architecture combines our proprietary FirstLight Lidar, imaging radar, high-resolution cameras, and tightly fused software layers, including Mainline Perception, the Remainder Explainer, and Fault Management. FirstLight currently sees more than 450 meters, and our next-generation FirstLight will extend that detection range to 1,000 meters.

Together, these components perceive the actors and obstacles that matter most to safe driving decisions, providing the redundancy and reliability essential for highway-speed autonomy.

The challenge: Why light, weather, and dynamics matter

The road is an unpredictable environment where visibility can deteriorate in an instant. Blinding glare from a low-horizon sun can wash out lane markings. Pitch darkness can cloak low-contrast hazards like an unlit disabled vehicle on the shoulder. While highway geometry is carefully engineered to maximize visibility, real-world driving still presents dynamic challenges. Traffic interactions and localized occlusions can produce “late reveal” actors — such as a vehicle cutting across traffic or a pedestrian stepping into view — that demand split-second detection and classification.

Weather adds another layer of complexity. In operational corridors like Texas and Arizona, fast-moving dust storms can reduce highway visibility to near zero in seconds. These aren't rare edge cases; validating performance in dust storms was essential to unlocking our driverless lane from Fort Worth to El Paso, as well as preparing for our El Paso-Phoenix extension.

The Aurora Driver is designed to adapt to these dynamic shifts. When visibility drops due to dust or fog, it can quickly detect these new conditions and adjust its behavior, slowing down to preserve safe stopping distance and reaction time. If conditions reach a critical threshold, it will autonomously execute fallback behavior, such as exiting the highway or pulling over to the shoulder. Reliable perception turns these chaotic variables into a manageable operational envelope, ensuring confident performance even in the most challenging real-world conditions.
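
The link between visible range and safe speed can be made concrete with a short kinematic sketch. This is an illustration only, not Aurora's method: the reaction time and deceleration are assumed placeholder values, and the function names are hypothetical. It models stopping distance as reaction distance plus constant-deceleration braking distance, then solves for the highest speed that still stops within the visible range.

```python
import math

MPH_TO_MPS = 0.44704  # exact conversion factor from miles per hour to meters per second


def stopping_distance_m(speed_mps, reaction_s, decel_mps2):
    """Distance covered during the reaction time plus constant-deceleration braking."""
    return speed_mps * reaction_s + speed_mps ** 2 / (2.0 * decel_mps2)


def max_safe_speed_mps(visible_range_m, reaction_s, decel_mps2):
    """Largest speed whose stopping distance fits inside the visible range.

    Solves v*t + v^2 / (2a) = d for the non-negative root v.
    """
    a, t, d = decel_mps2, reaction_s, visible_range_m
    return -a * t + math.sqrt((a * t) ** 2 + 2.0 * a * d)


# Assumed values for illustration: 0.5 s reaction time, 3.0 m/s^2 deceleration.
highway_speed = 65 * MPH_TO_MPS
print(stopping_distance_m(highway_speed, reaction_s=0.5, decel_mps2=3.0))
print(max_safe_speed_mps(50.0, reaction_s=0.5, decel_mps2=3.0) / MPH_TO_MPS)
```

Under these assumed numbers, stopping from 65 mph takes roughly 155 meters, and a visible range of 50 meters supports only about 35 mph. That is why slowing down is the first response when dust or fog shortens how far the sensors can see.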

The hardware edge: Seeing farther and faster

To conquer the physics of highway driving, Aurora relies on a custom-integrated hardware suite anchored by FirstLight Lidar. Using Frequency Modulated Continuous Wave (FMCW) technology, FirstLight leverages coherent detection, allowing it to perceive faint signals through glare and low light more than 450 meters away. It measures velocity by relying on the Doppler effect, giving the Aurora Driver crucial data for ensuring smooth, safe reactions at speed.
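
As a rough illustration of how an FMCW sensor can recover range and velocity from the same measurement, the sketch below uses the textbook triangular-chirp model. It is not a description of FirstLight's actual signal processing: the wavelength, chirp bandwidth, chirp duration, and sign convention are all assumptions, and the function names are hypothetical. In this model the up-chirp and down-chirp beat frequencies are the range term minus and plus the Doppler term, so their average gives range and half their difference gives closing speed.

```python
C = 299_792_458.0  # speed of light in m/s


def beat_frequencies(range_m, closing_speed_mps, bandwidth_hz, chirp_s, wavelength_m):
    """Forward model: beat frequencies on the up-chirp and down-chirp.

    Assumed convention: a closing target lowers the up-chirp beat and raises
    the down-chirp beat by the Doppler shift 2*v/wavelength.
    """
    f_range = 2.0 * range_m * bandwidth_hz / (C * chirp_s)
    f_doppler = 2.0 * closing_speed_mps / wavelength_m
    return f_range - f_doppler, f_range + f_doppler


def range_and_closing_speed(f_up_hz, f_down_hz, bandwidth_hz, chirp_s, wavelength_m):
    """Inverse model: recover range and closing speed from the two beat frequencies."""
    f_range = 0.5 * (f_up_hz + f_down_hz)
    f_doppler = 0.5 * (f_down_hz - f_up_hz)
    range_m = C * f_range * chirp_s / (2.0 * bandwidth_hz)
    closing_speed_mps = 0.5 * wavelength_m * f_doppler
    return range_m, closing_speed_mps


# Assumed parameters: 1550 nm laser, 1 GHz chirp bandwidth, 10 microsecond chirp.
params = dict(bandwidth_hz=1e9, chirp_s=1e-5, wavelength_m=1550e-9)
f_up, f_down = beat_frequencies(450.0, 29.0, **params)
print(range_and_closing_speed(f_up, f_down, **params))
```

The point of the sketch is that velocity comes out of a single measurement, rather than from differencing positions across frames.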

This long-range precision is fused with imaging radar, which penetrates dense fog and rain to track motion where light cannot. High-resolution cameras add semantic details such as signage, lane markers, and critical actor attributes like emergency vehicles and traffic authorities. By overlapping the fields of view across these diverse sensors, and keeping them clear with onboard sensor-cleaning systems, the Aurora Driver builds a unified view of its surroundings that remains reliable even when individual sensors are challenged.

Measuring velocity directly at long range reduces uncertainty before planning begins, enabling smoother lane-keeping and braking decisions well before a human driver would perceive the same hazard.

Software architecture: The layers of perception

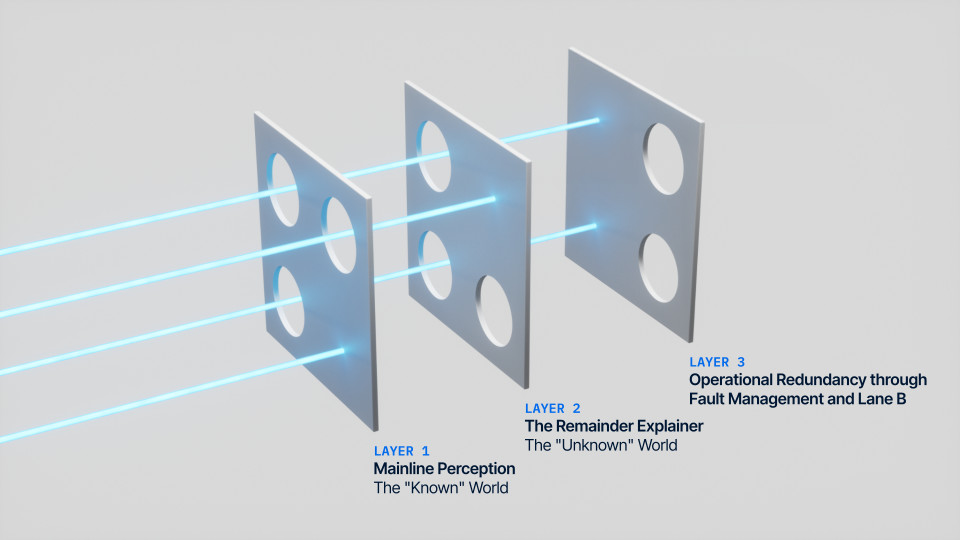

Hardware delivers the raw sensor data, but software makes sense of it. Aurora’s perception stack doesn't rely on a single algorithm or model to interpret the world. Instead, we follow a "Swiss Cheese" approach of multiple, overlapping software layers designed so that the strengths of one layer cover the potential limitations of another.

Layer 1: Mainline Perception (The "Known" World)

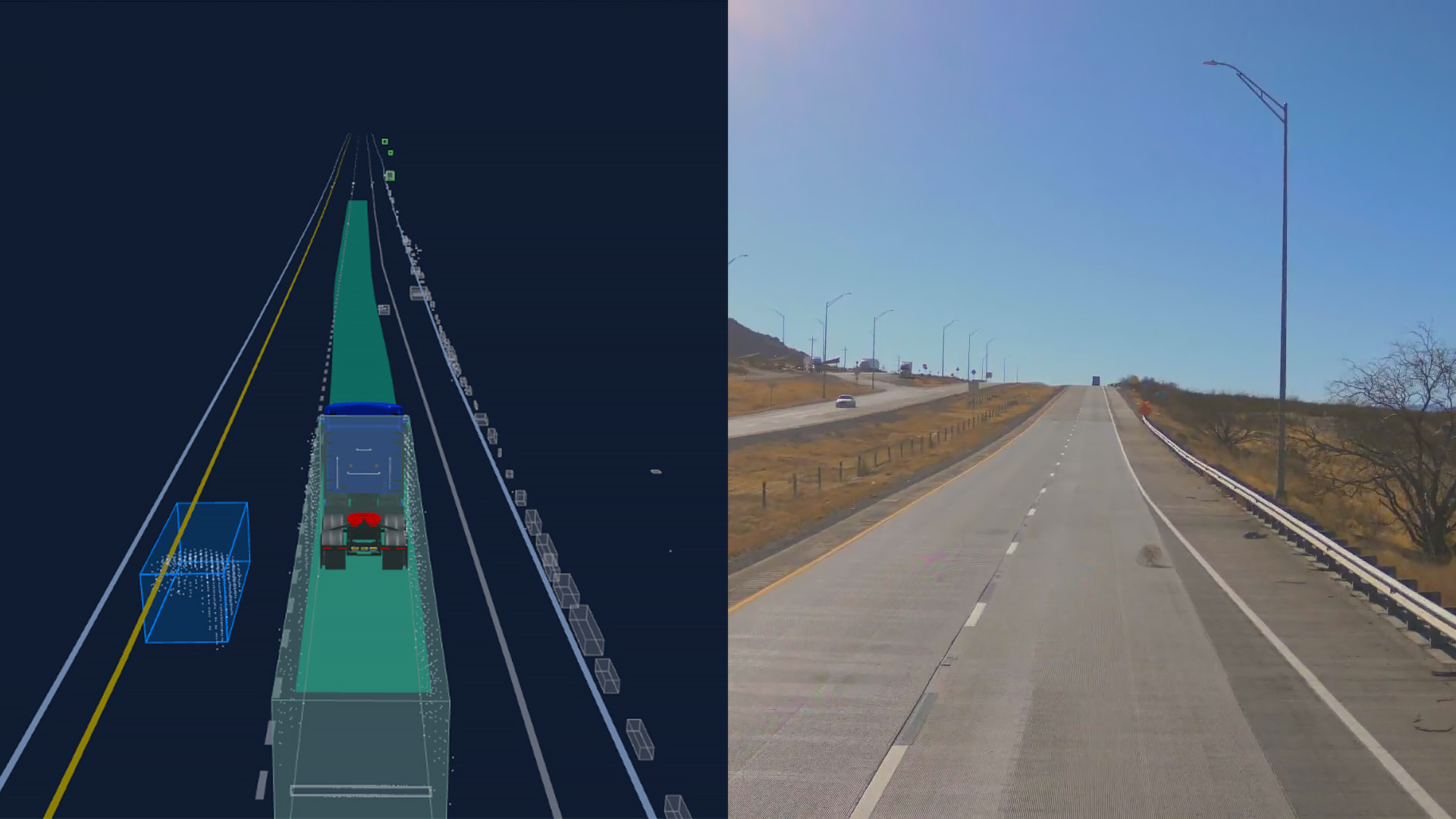

Mainline Perception is our primary interpretation layer. It fuses data from lidar, radar, and cameras to identify and track actors of known categories, such as vehicles, motorcycles, pedestrians, cyclists, and more. But detection is only the first step. The system also tracks each actor’s dynamics over time to understand intent and likely behavior. Our tracking system uses a neural network that operates directly on raw data from our entire sensor suite. It analyzes the rich history of lidar, radar, and image inputs for every object to precisely refine its current position and predicted movement.

What makes this tracking system unique is how it learns. At the core is a neural network we call S2A (sensor-to-adjustment), which operates directly on raw lidar, radar, and camera data — not just high-level detections. Every cycle, S2A focuses narrowly on each individual actor, pulling a localized “crop” of sensor data around that object and continuously refining its position, velocity, and orientation.

This process happens thousands of times per second, across every tracked object, without distraction. Where a human driver can only focus on a few actors at once, the Aurora Driver optimizes every relevant actor simultaneously — maintaining precise, stable estimates even at long range and high speed.
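
The per-actor "crop and refine" loop can be pictured with a toy sketch. S2A is a learned network whose internals are not given here, so this is only a stand-in: it shows the cropping step on 2D points, and a simple centroid nudge takes the place of the learned adjustment. The `Track` type, the `gain` parameter, and both function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Track:
    x: float            # current position estimate, meters
    y: float
    half_extent: float  # half the side length of the square crop, meters


def crop_points(points, track):
    """Return the points inside the track's square crop, in track-centered coordinates."""
    local = []
    for px, py in points:
        dx, dy = px - track.x, py - track.y
        if abs(dx) <= track.half_extent and abs(dy) <= track.half_extent:
            local.append((dx, dy))
    return local


def refine(track, points, gain=0.5):
    """Stand-in for the learned adjustment: nudge the track toward the crop centroid."""
    local = crop_points(points, track)
    if not local:
        return track  # nothing observed near this actor; keep the prior estimate
    mean_dx = sum(p[0] for p in local) / len(local)
    mean_dy = sum(p[1] for p in local) / len(local)
    return Track(track.x + gain * mean_dx, track.y + gain * mean_dy, track.half_extent)


# Two returns near the actor and one far away that the crop excludes.
points = [(10.5, 0.0), (11.5, 0.0), (100.0, 100.0)]
print(refine(Track(x=10.0, y=0.0, half_extent=3.0), points))
```

Because each crop is small and independent, the same refinement can be applied to every tracked actor on every cycle.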

Layer 2: The Remainder Explainer (The "Unknown" World)

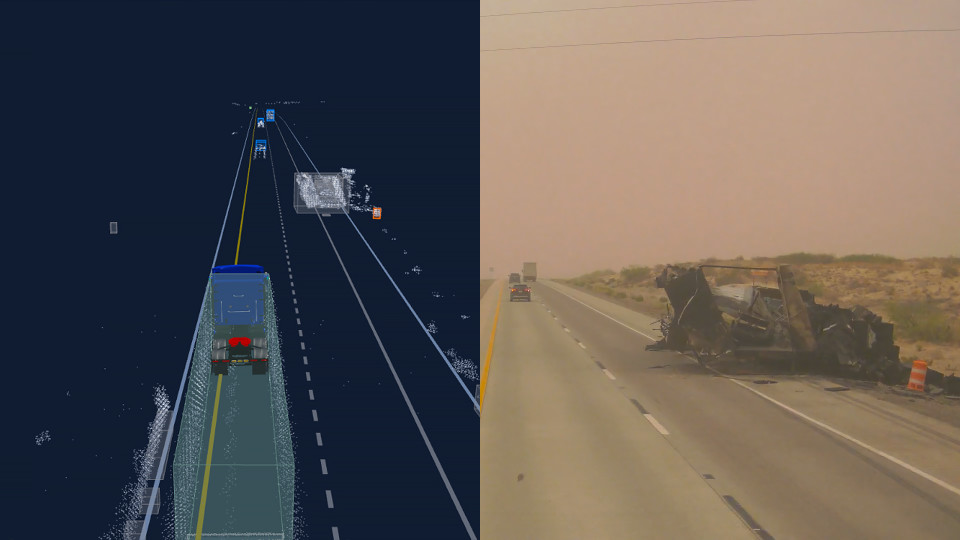

Real roads are messy. Many objects don’t fit neatly into a “car” or “pedestrian” category, such as a dropped couch, a detached tire, or livestock. If Mainline Perception cannot classify something, the system doesn't just ignore it. Instead, the Remainder Explainer analyzes the raw sensor returns (the “remainders”) and determines whether they represent a physical obstacle. But knowing something is there isn't enough, because not all objects need to be avoided: swerving for a small plastic bag is unnecessary, while hitting a cinder block is dangerous.

To handle this nuance, the system calculates a machine-learned avoidance score based on factors like the object's dimensions, estimated material type, and motion. This allows the Aurora Driver to distinguish between hazardous obstacles that must be avoided and trivial debris that can be safely driven over if necessary.
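
To make the idea of an avoidance score concrete, here is a minimal sketch that maps a few object features to a value between 0 and 1 with a logistic function. The real score is machine-learned and its inputs and form are not specified beyond the factors named above, so everything here is an assumption: the feature names, the hand-picked weights, the bias, and the 0.5 decision threshold are all made up for illustration.

```python
import math

# Hypothetical, hand-picked weights; a real system would learn these from data.
WEIGHTS = {"height_m": 6.0, "rigidity": 4.0, "speed_mps": 0.2}
BIAS = -3.0


def avoidance_score(height_m, rigidity, speed_mps):
    """Logistic score in (0, 1); higher means the object should be avoided.

    rigidity is an assumed 0-to-1 proxy for estimated material type
    (0 = soft like a plastic bag, 1 = hard like concrete).
    """
    z = (BIAS
         + WEIGHTS["height_m"] * height_m
         + WEIGHTS["rigidity"] * rigidity
         + WEIGHTS["speed_mps"] * speed_mps)
    return 1.0 / (1.0 + math.exp(-z))


plastic_bag = avoidance_score(height_m=0.2, rigidity=0.0, speed_mps=1.0)
cinder_block = avoidance_score(height_m=0.2, rigidity=1.0, speed_mps=0.0)
print(plastic_bag < 0.5 < cinder_block)
```

Two objects of the same height land on opposite sides of the threshold because the material estimate differs, which mirrors the plastic bag versus cinder block distinction.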

The Remainder Explainer is built on the assumption that no training set — no matter how large — can capture every object the real world will produce. Rather than trying to enumerate the unknown, the system is trained for high recall, learning to explain any physical structure that appears in sensor data, even if it has never been seen before. This allows the Aurora Driver to reason about the presence and risk of novel obstacles without needing a predefined label.

In a recent on-road example outside El Paso, the Remainder Explainer detected a charred trailer partially blocking its highway lane — an object the system had never encountered during training. Rather than misclassifying or ignoring it, the system identified it as a physical obstacle, assigned a high avoidance score, and safely navigated around it. This ability to generalize — to respond appropriately to objects it has never seen — is critical for operating reliably at scale in an open world.

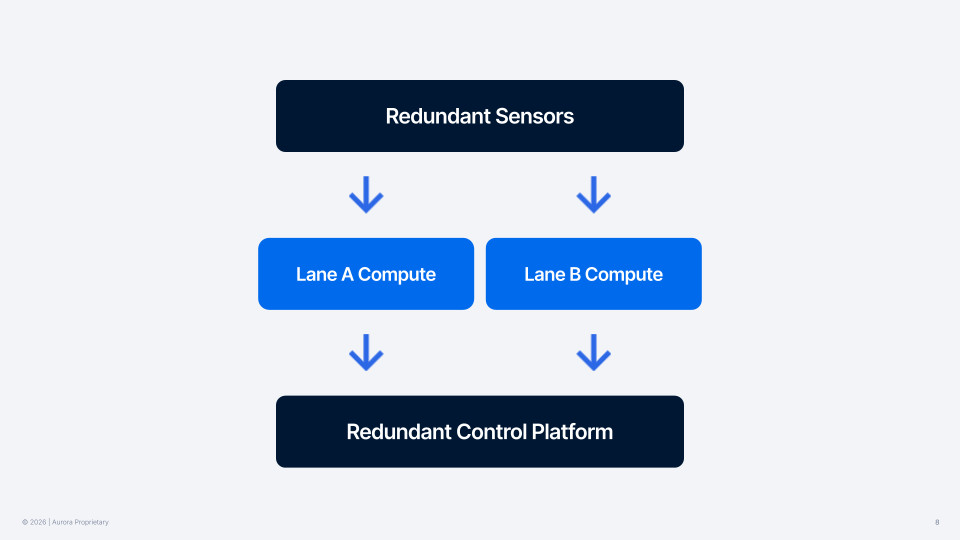

Layer 3: Operational Redundancy through Fault Management and Lane B

True operational readiness requires a system that manages its own failures as effectively as it navigates road conditions. The Aurora Driver’s Fault Management System (FMS) continuously monitors for software and hardware degradation, instantly flagging and handling issues before they become critical.

If sensors or computers fail, the vehicle cannot just come to a stop. It needs to continue perceiving the world in order to navigate off the highway or to a shoulder. For example, if a fault occurs in a construction zone, the system needs to continue driving through that construction zone until it can find an open shoulder to get out of the travel lanes.

The system handles such situations in two ways. First, perception is trained to be robust to hardware issues in which one or more sensors are lost. When that happens, perception keeps working well enough to get the truck safely off the road, even with part of the sensing suite unavailable. Second, in the case of a full compute failure, the redundant system design provides an independent backup computer that can take control and navigate to a pull-off location.
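
The two fallback paths described above can be summarized as a small decision function. This is a simplified sketch, not the Fault Management System's actual logic: the mode names, the health inputs, and the priority order are assumptions made for illustration.

```python
from enum import Enum


class Mode(Enum):
    NOMINAL = "nominal"
    DEGRADED_PULL_OVER = "degraded_pull_over"  # primary computer, reduced sensing
    BACKUP_PULL_OVER = "backup_pull_over"      # independent backup computer in control


def select_mode(sensors_ok, primary_compute_ok):
    """Pick an operating mode from hypothetical health flags.

    sensors_ok maps a sensor name to True if that sensor is healthy.
    A compute failure takes priority because the primary stack cannot act at all.
    """
    if not primary_compute_ok:
        return Mode.BACKUP_PULL_OVER
    if not all(sensors_ok.values()):
        return Mode.DEGRADED_PULL_OVER
    return Mode.NOMINAL


healthy = {"lidar": True, "radar": True, "camera": True}
print(select_mode(healthy, primary_compute_ok=True))
print(select_mode({**healthy, "camera": False}, primary_compute_ok=True))
print(select_mode(healthy, primary_compute_ok=False))
```

In both fallback modes the vehicle keeps perceiving and driving until it reaches a safe place to stop, rather than halting in the travel lane.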

Verifiable AI: Stress-testing every layer of perception

Superhuman perception doesn’t emerge from raw sensing power alone — it is trained, tested, and continually refined. Aurora’s Verifiable AI approach ensures that every perception layer performs safely and consistently across the full spectrum of real-world and hypothetical driving conditions.

At the foundation is a continually expanding, rigorously curated test suite drawn from across Aurora’s Operational Design Domain (ODD). This data set combines:

Real-world logs from on-road operations

Closed-track scenarios designed to capture rare or difficult-to-observe events

High-fidelity sensor simulation, essential for generating dangerous or extremely rare events (including reconstructions of scenarios from the NHTSA collision database)

Fault-injection tests where we simulate the loss of individual or multiple sensors or even an entire compute lane

These diverse test cases allow us to evaluate each perception component — Mainline Perception, the Remainder Explainer, and fallback behavior — against both observed and unobserved scenarios.
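
Fault-injection cases like those in the list above can be enumerated systematically. The sketch below is an assumption about how such a harness might be organized, not a description of Aurora's tooling: the sensor names, the frame layout, and the function names are hypothetical. It generates every combination of lost sensors up to a chosen size and removes those sensors from a recorded frame before replaying it.

```python
from itertools import combinations

SENSORS = ("lidar", "radar", "camera")  # hypothetical sensor groups


def sensor_loss_cases(sensors, max_lost):
    """Yield every set of 1..max_lost sensors to drop in a fault-injection run."""
    for k in range(1, max_lost + 1):
        for lost in combinations(sensors, k):
            yield frozenset(lost)


def drop_sensors(frame, lost):
    """Return a copy of the frame without data from the lost sensors."""
    return {name: data for name, data in frame.items() if name not in lost}


frame = {"lidar": [1, 2, 3], "radar": [4, 5], "camera": [6]}
for lost in sensor_loss_cases(SENSORS, max_lost=2):
    degraded = drop_sensors(frame, lost)
    print(sorted(lost), "->", sorted(degraded))
```

Each degraded frame would then be fed to the perception component under test, so the same scenario is evaluated with the full suite and with every loss combination.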

Validating perception isn’t only about confirming that each module performs as expected. Aurora tests the perception module at two complementary levels:

Module-Level Testing (Within Perception): These tests measure whether each perception sub-system correctly detects, classifies, and reasons about the world. For example, we evaluate how well the Remainder Explainer distinguishes hazards from benign objects, or how robust Mainline Perception remains when sensors are degraded. This includes explicit tests for sensor-loss conditions, ensuring the Aurora Driver can still perceive well enough to execute safe fallback maneuvers — even when operating with reduced sensing capability.

System-Level Testing (Beyond Perception): A small change within perception can have a large effect downstream. For instance, a minor shift in estimated long-range object position may change which side the system navigates around the object. To capture these cross-system impacts, Aurora runs full end-to-end tests with motion planning in the loop, evaluating how perception outputs influence the Aurora Driver’s behavior across a wide variety of operational conditions.

A key part of system-level testing is counterfactual (“What-If”) testing, which helps us understand how the system behaves even when unlikely events stack in unpredictable ways. We’ll explore this approach in greater depth in an upcoming blog post focused entirely on Verifiable AI.

Together, Aurora’s module- and system-level tests form a comprehensive validation framework that challenges the Aurora Driver with scenarios ranging from the mundane to the extreme. This disciplined stress-testing ensures the system performs safely, predictably, and consistently long before it operates on public roads.

The foundation for safe, scalable autonomy

High-quality perception is more than a technical milestone — it’s the foundation that underpins safe autonomous driving. Each layer of Aurora’s perception system is designed to help the Aurora Driver understand the world with clarity, consistency, and confidence, even when conditions are at their most unpredictable.

Superhuman perception allows the Aurora Driver to detect and respond to its environment in a predictable, safe, and repeatable way. It’s what turns dust storms, darkness, glare, and fast-changing road dynamics into manageable operating conditions.

With superhuman perception, Aurora’s trucks don’t just see farther — they see the road more completely, reliably, and consistently than a human ever could. That capability powers every decision the Aurora Driver makes, forming the bedrock of safe, scalable, around-the-clock freight operations.
